I’ve been busy the last couple of months! Aside from 35 meetings and some interesting work with Beam and Save the Children, I spent about 200 hours on my first social impact project: a chatbot that raises money for the Against Malaria Foundation so they can buy more bed nets to protect people in sub-Saharan Africa from malaria-infected mosquitoes at night.

A chatbot is an automated conversational experience. Facebook have a platform that enables developers to build chatbots within Facebook Messenger. They’re like apps, but you don’t have to install them. They just work inside Messenger.

Who Are The Against Malaria Foundation?

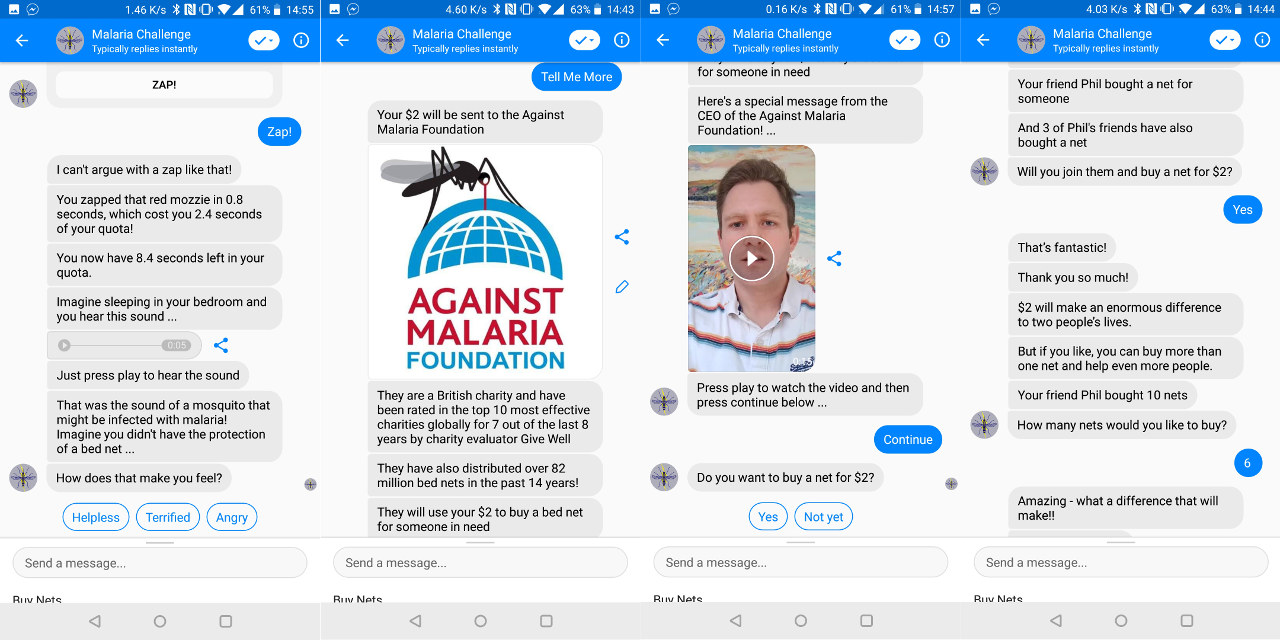

The Against Malaria Foundation are one of the most effective charities globally, as rated by the charity evaluator GiveWell. They are a team of only six people, but they have distributed over 82 million bed nets over the past 14 years. A long-lasting insecticide-treated bed net protects two people from malaria-infected mosquitoes at night for three years, and each one costs only about £1.50! They have a funding gap of over £100 million, which means that people in sub-Saharan Africa will sleep unprotected at night unless more people donate. So for a small amount of money, we can make a big difference in people’s lives.

Despite being such a small team distributing millions of bed nets every year, the Against Malaria Foundation use technology to track every bed net distribution to within six metres. This ensures that nets reach their destinations and keeps the governments they work with accountable. It also makes them quite a strategic partner, as we could do more interesting things together in the future, such as letting a donor know exactly which village, or maybe even which person, they’ve helped!

Project Status

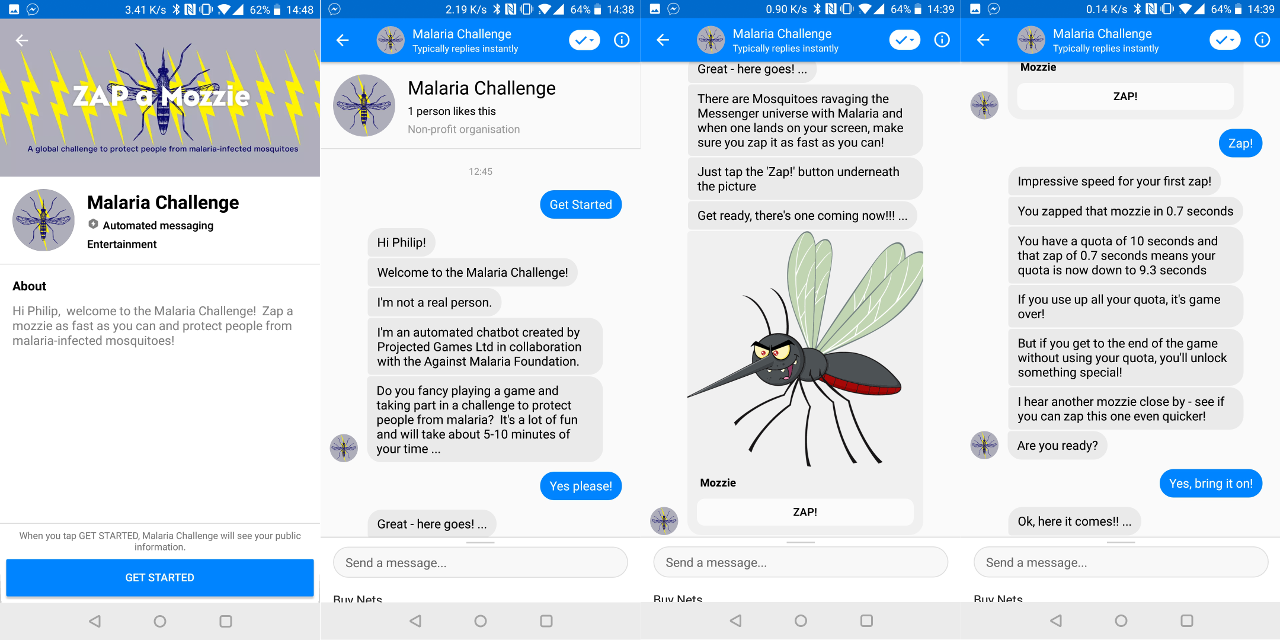

After 10,000 lines of code, 40 user tests and five big iterations of the product, it’s ready for live beta testing. Facebook have approved it and payments work seamlessly in 135 different currencies (using Stripe). Here are some screenshots of the experience (a full video run-through is included towards the end of the blog post):

I’d love to share the live product with you all now, but I still need to work with the Against Malaria Foundation on a few things. For example, we need to connect their bank account (rather than mine!) and I need a few videos from the CEO to replace mine in the chatbot.

So in the meantime, I thought I’d share the journey I went through in building this chatbot …

Project Goal

The goal of this project is two-fold:

- Persuade people to buy at least one bed net for about £1.50

- Get people to invite and persuade their friends to buy bed nets

If things go really well, this chatbot will go viral and raise millions of pounds for the Against Malaria Foundation. But it’s a big if!

Why a Chatbot?

If our goal is to raise money for the Against Malaria Foundation, why build a chatbot? Or why even build a digital product?

Why a Digital Product?

Digital products are expensive to build, but they scale really nicely.

This project has consumed about 200 hours of my time. And I’ve not launched a first version yet! That’s fairly typical of digital projects. Moreover, once they do launch, most consumer products fail. Their success typically requires many people to use the product and engage in the way the creator intends (in this case to buy bed nets and share with their friends). It’s hard to drive user behaviour (because people have short attention spans and they’re busy). So the chances of success are typically quite low.

But if they do work, they scale incredibly well. Imagine this project worked and went viral. All it would take is for, say, 1 in 5 people who receive an invite to the chatbot to buy a net, and for each of those buyers to invite more than 5 people. If those metrics held, it would go viral and could well raise millions of pounds. It’s hard, but not impossible. If that happened and we raised, say, £50 million in total, my return on time invested would be £250,000 raised per hour (£50 million over about 200 hours). At about £1.50 per net, with each net protecting two people, every hour of my time would result in over 300,000 people being protected from malaria-infected mosquitoes at night. So it’s worth a shot!
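The virality threshold above comes down to a couple of multiplications. Here is a minimal sketch using the common "viral coefficient" k (the average number of new buyers each buyer brings in; above 1 means each generation of buyers is bigger than the last). The function names, the simple geometric growth model and the £1.50 default price are illustrative assumptions, not code from the chatbot.

```javascript
// Viral coefficient k: average number of new buyers each buyer brings in.
// k > 1 means each "generation" of buyers is bigger than the last.
function viralCoefficient(conversionRate, invitesPerBuyer) {
  return conversionRate * invitesPerBuyer;
}

// Size of generation g, starting from a seed group (simple geometric model).
function buyersInGeneration(seedBuyers, k, generation) {
  return seedBuyers * k ** generation;
}

// People protected per hour of effort, assuming ~£1.50 per net and 2 people per net.
function peopleProtectedPerHour(amountRaisedGbp, hoursInvested, pricePerNetGbp = 1.5, peoplePerNet = 2) {
  const raisedPerHour = amountRaisedGbp / hoursInvested;
  return (raisedPerHour / pricePerNetGbp) * peoplePerNet;
}

console.log(viralCoefficient(0.2, 5));      // 1 (exactly at the threshold)
console.log(viralCoefficient(0.2, 6) > 1);  // true (growth)
console.log(Math.round(peopleProtectedPerHour(50_000_000, 200))); // 333333
```

Inviting "more than 5" people is what pushes k above 1; at exactly 5, each generation merely replaces itself.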

And why a chatbot over an app or a web-based experience?

A chatbot vs an app

Well, first of all chatbots are easy to access. In one tap, you’re instantly inside the chatbot, enjoying the experience. With an app it requires an install, which can be time-consuming and can eat up people’s mobile data and space on their phone. This causes a lot of people to “drop off” before getting to the experience. It turns out that this is a big deal when you’re trying to build viral products.

Let’s say that 80% of people drop off when installing an app (vs. no-one for the chatbot). We’d then have to improve our metrics by 5x to achieve virality. So for example, 1 in 2 people who install the app would have to buy a net and each one of them would have to invite more than 10 people. That’s much harder to achieve!
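To see where the "5x" comes from, the install step can be modelled as one more factor in the funnel. This sketch assumes the same simple model as above (k = install rate × purchase rate × invites per buyer); the helper names are hypothetical.

```javascript
// Effective viral coefficient when invitees must first get through an install step.
function effectiveViralCoefficient(installRate, buyRateOfInstallers, invitesPerBuyer) {
  return installRate * buyRateOfInstallers * invitesPerBuyer;
}

// Invites per buyer needed to reach k = 1 for a given funnel.
function invitesNeededForVirality(installRate, buyRateOfInstallers) {
  return 1 / (installRate * buyRateOfInstallers);
}

// Chatbot: no install step, 1 in 5 buy: each buyer must invite more than 5 people.
console.log(invitesNeededForVirality(1, 0.2));   // 5
// App: 80% drop off at install, 1 in 2 installers buy: more than 10 invites each.
console.log(invitesNeededForVirality(0.2, 0.5)); // 10
```

Because the install rate multiplies everything else, losing 80% of people at install means the rest of the funnel (purchase rate × invites) has to be 5 times better to compensate.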

A chatbot vs a web experience

What about a web experience? It’s true that web experiences don’t require an install, but it is also very hard to reconnect with users. This means that you can provide an experience the first time a user visits your web page, but once they leave you won’t be able to engage them again. This is partly because you have no easy way of reaching them and also because you have no easy and reliable way of remembering who they are.

Chatbots solve both of these problems. Once a user enters a chatbot, you’ll always know who they are when they come back. And you can send them messages to keep them engaged after the first interaction. These properties will give us a much better chance of achieving our goals.
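Messenger identifies each user to a bot with a page-scoped ID (PSID), delivered as `sender.id` on every webhook event, which is what makes "remembering" a user straightforward. Below is a minimal in-memory sketch of recognising a returning user; `recordVisit` is a hypothetical helper, and a real bot would persist this in a database rather than a `Map`.

```javascript
// Users keyed by their page-scoped ID (PSID) from the webhook's sender.id.
// In-memory Map for illustration only; a real bot would persist this.
const users = new Map();

// Records a visit and reports whether this user has been seen before.
function recordVisit(psid, nowMs = Date.now()) {
  const existing = users.get(psid);
  if (existing) {
    existing.visits += 1;
    existing.lastSeenMs = nowMs;
    return { returning: true, user: existing };
  }
  const user = { psid, visits: 1, firstSeenMs: nowMs, lastSeenMs: nowMs };
  users.set(psid, user);
  return { returning: false, user };
}

console.log(recordVisit("1234", 1000).returning); // false (first visit)
console.log(recordVisit("1234", 5000).returning); // true (welcome back)
```

Sending follow-up messages later still goes through the Messenger Send API with the same PSID as the recipient, subject to Messenger's messaging-window policies.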

Other nice properties of chatbots

Chatbots are also really easy and natural to use, because they are so similar to the normal Messenger conversations everyone is familiar with. As chatbots are so new, there is also a decent chance of getting lots of free traffic. For example, Facebook have a “Discover Tab” where people can find chatbots they might like within Messenger. Given the social impact of this project, it might get featured there, which could drive a LOT of traffic. Chatbots are also a new and novel experience for most users, which may make people more likely to try one out and engage with it than they would a website or an app.

Chatbots are actually quite a bit easier to build than apps and websites too. This is because you don’t have to worry about the User Interface (UI). The Messenger Platform comes with a bunch of predefined templates to build out a chatbot. While this can be limiting in what you are able to do, it also means that you don’t have to spend much time on the look-and-feel. Instead, it just involves writing backend code to render the right components at the right time. This actually speeds up development significantly.
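As an illustration of "rendering the right components" from the backend, here is a sketch that builds a Messenger button template message. The `attachment`/`template`/`button_template` structure follows the Messenger Platform's documented format; the `buttonTemplate` helper, the "Zap" button and its `ZAP` payload are hypothetical examples.

```javascript
// Builds a Messenger "button template" message. The platform renders the UI;
// the backend only decides which template to send and with which buttons.
function buttonTemplate(text, buttons) {
  if (buttons.length < 1 || buttons.length > 3) {
    throw new Error("A button template takes between 1 and 3 buttons");
  }
  return {
    attachment: {
      type: "template",
      payload: {
        template_type: "button",
        text,
        // Postback buttons send their payload back to the bot's webhook when tapped.
        buttons: buttons.map(({ title, payload }) => ({ type: "postback", title, payload })),
      },
    },
  };
}

// Hypothetical "zap" prompt.
const zapPrompt = buttonTemplate("A mozzie is coming!", [{ title: "Zap", payload: "ZAP" }]);
console.log(JSON.stringify(zapPrompt, null, 2));
```

The returned object goes in the `message` field of a Send API request, alongside a `recipient` containing the user's ID.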

Some Prior Chatbot Success

Finally, I have some prior experience building a chatbot that has had some traction. This gives me more confidence in the platform. It’s a game called Whack-a-Mole, whereby moles pop up throughout the day inside Messenger and you have to “whack” them as fast as you can. I launched it in April 2017 and it has grown to over 28,000 players (mostly through players inviting their friends to play). 400 people have been whacking moles most days for over a year! I have about 1,000 daily active players today. And over 5 million moles have been whacked to-date!

My Relevant Experience

I’m not coming at this project completely cold. In addition to my experience building Whack-a-Mole, I have a number of other experiences that should help increase the likelihood of success.

I worked on the Messenger Platform team at Facebook for a year. So I’m familiar with the platform and worked with a number of companies in building out their chatbots. The chatbot I’ve been working on is a game and I worked on the Games Team at Facebook for a couple of years. This has given me some understanding of how to build engaging games. I’ve focused on mobile technologies and products for the past 8 years too, which helps for this project.

I have quite a bit of software engineering and product experience. This gives me the independence to build this on my own without relying on a developer to assist me. I have some gaps, such as design, but I’ve been fortunate to find people along the way who have been able to help me.

This project (and I’m sure many more in the future) relies on partnerships with other organisations. In this case, the Against Malaria Foundation. I worked in a partnerships role for 8 years at Google, Facebook and Monzo, which should help.

Finally, I was part of Tearfund’s fundraising committee for 8 years. This gave me good exposure to the dynamics of fundraising and some insight into donor psychology.

General Approach to Building a Chatbot

If I had to sum up my approach to building products, it would be to build something as fast as possible and put it in front of users to see how they behave. Then iterate based on what I learn.

The Silicon Valley Way

I didn’t make this up. This is the conventional wisdom of Silicon Valley today. Paul Graham talks about this as his #1 lesson in his essay on startup lessons – release early. That means to put something in front of people as soon as possible so you can learn and iterate as fast as possible.

The most recommended book at the moment for product leaders in tech startups is Inspired by Marty Cagan. I read it a couple of months ago and a big part of the focus is on the process and techniques for product discovery and prototyping. This is essentially the same thing as building something and putting it in front of users to see how they behave.

Prototypes vs Working Products

That’s essentially my approach to this project. But instead of putting prototypes in front of users, I built a working chatbot. Prototypes are typically a series of screenshots of what the product will look like, whereby some of the buttons in the screenshots are tappable and take you to the next one. This makes the experience look and feel like a real product. Marvel is a popular technology for building prototypes.

The benefit of building prototypes over working products is that they are usually much quicker to build. This lets product teams learn and iterate much quicker. But as I am familiar with the Messenger Platform and can repurpose code from my previous project, Whack-a-Mole, it was just as quick for me to build an actual product rather than a prototype.

I went through five iterations in building out this chatbot. After each iteration, I put it in front of a few users and watched how they interacted and listened to what they said. Based on what I learnt, I then made some changes to produce the next iteration.

Let me walk through the process of building out each iteration, how I conducted my user tests, what I learnt and how I applied that learning in the next iteration.

Iteration 1 (20 hours)

Preparation

On October 17th, two days before a hackathon, I started pulling together some foundational code for the project. I copied the entire code base for Whack-a-Mole and stripped it right down from 16,000 lines of code to about 2,000. This gave me the common components I would need to get started, such as processing incoming messages and responding with different message formats, saving and retrieving data from the database, and a robust integration with the Messenger Platform. I also put together a simple guide to help other developers who joined my project at the hackathon get started quickly.
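"Processing incoming messages" starts with the webhook: Messenger POSTs a JSON body with `object: "page"` and an `entry` array, each entry holding a `messaging` array of events. This sketch flattens that body into simple events the rest of a bot could route on. It is an illustration of the idea, not the Whack-a-Mole code; `extractEvents` and the `kind` labels are hypothetical names.

```javascript
// Flattens a Messenger webhook POST body into simple events a bot can route on.
function extractEvents(body) {
  if (body.object !== "page") return [];
  const events = [];
  for (const entry of body.entry || []) {
    for (const event of entry.messaging || []) {
      const senderId = event.sender && event.sender.id;
      // Skip echoes of the bot's own messages (sent if echo events are subscribed).
      if (event.message && event.message.is_echo) continue;
      if (event.postback) {
        // A tapped postback button, e.g. "Zap".
        events.push({ senderId, kind: "postback", value: event.postback.payload });
      } else if (event.message && event.message.quick_reply) {
        // A tapped quick reply carries a developer-defined payload.
        events.push({ senderId, kind: "quick_reply", value: event.message.quick_reply.payload });
      } else if (event.message && typeof event.message.text === "string") {
        // Free text typed by the user.
        events.push({ senderId, kind: "text", value: event.message.text });
      }
    }
  }
  return events;
}
```

Keeping this parsing separate from the game logic makes it easy to handle unexpected textual input in one place.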

The Hackathon

That weekend, Kingdom Code (a community of Christian technologists) ran a 24-hour hackathon at a church called St John’s Hoxton in East London. They brought together about 60 people to build out projects focused on inspiring Christians to give more generously and helping Christians to engage more deeply with the Bible. I ran a project to build a first version of this chatbot.

After pitching to the group, I had five people join me for my project – a designer and four developers. Our designer, David Sorley (who runs his own creative agency called Boxhead), came up with the initial concept of zapping mosquitoes (“mozzies”) as fast as possible, interspersed with information about malaria. I wasn’t so sure at first as it was so similar to Whack-a-Mole, but the rest of the team liked it so we moved forwards. It turned out to work really well! And it had the added benefit that we could repurpose a bit more of the Whack-a-Mole code base. This gave us a further head start on the project.

After about 6 hours of “hacking” (writing code quickly, even if a little messy) we had a working demo, which we showed to the group in the final presentations, hosted at the Monzo offices. Here’s what we produced in 24 hours and demoed:

We only successfully addressed the first of our two goals – trying to persuade people to buy a bed net.

Our Approach

Let me unpack our approach a little more here.

In order to persuade someone to enter their credit card details and pay money, we’d have to convince them that the cause was worthwhile and legitimate.

Convincing people on the worthiness of the cause involved education. We had to educate them on what malaria is, why it’s so bad and how cost-effectively we can help people. But just feeding people this information would be a little boring and unlikely to be very engaging. That’s where zapping mozzies came in! We interspersed facts about malaria with mozzies that people had to zap as fast as possible. If they were too slow, it would be game over. This fun part of the chatbot would more likely keep people engaged for longer so we had the time to educate them about malaria.
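One way to model this mechanic is a script of steps that alternates facts with mozzies, each mozzie carrying a time limit. This is a hedged sketch: the placeholder facts, the limits and the `judgeZap` helper are illustrative assumptions, and timing based on message timestamps will include some delivery delay.

```javascript
// Hypothetical script: facts interleaved with mozzies that must be zapped in time.
// The placeholders stand in for the real facts; the limits are illustrative.
const script = [
  { type: "fact", text: "(fact about malaria)" },
  { type: "mozzie", limitMs: 4000 },
  { type: "fact", text: "(another fact)" },
  { type: "mozzie", limitMs: 3000 },
];

// Judges a zap: sentAtMs is when the mozzie was sent, zappedAtMs when the
// "Zap" tap arrived (e.g. taken from the webhook event's timestamp).
function judgeZap(step, sentAtMs, zappedAtMs) {
  if (step.type !== "mozzie") throw new Error("Only mozzies can be zapped");
  const reactionMs = zappedAtMs - sentAtMs;
  return { reactionMs, gameOver: reactionMs > step.limitMs };
}

console.log(judgeZap(script[1], 0, 2500)); // { reactionMs: 2500, gameOver: false }
console.log(judgeZap(script[3], 0, 3500)); // { reactionMs: 3500, gameOver: true }
```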

We also introduced a question for the user so they would be further engaged with the experience. And we tried to give the user some experience of what it is like having mosquitoes coming at them all the time, so they could potentially build some empathy with the people we were trying to help in sub-Saharan Africa. The mozzies coming at them helped with this, as did other touches like the sound of a mosquito.

Finally, we tried to demonstrate the legitimacy of the cause by showing videos of the CEO of the Against Malaria Foundation (which is where their money would go). Showing these video (and audio) clips further helped to make the experience more engaging.

Iteration 2 (20 hours)

User Testing Goals

After about 20 hours of work (3 days), I had a first version to show people! Now for some user testing. My goals with user testing were two-fold:

- Is the product “valuable”? i.e. in my case, is the experience enough to persuade people to part with £1.50?

- Is the product “usable”? e.g. Do people get stuck or confused at any point? And are they able to use or consume all parts of the experience intended for them?

My Approach to User Testing

Now, if you read up on how to do proper “user testing” or you happen to have some experience in user testing, you’ll know that it is important to:

- Talk to your target users. This involves recruiting the right people carefully, often with the help of an agency.

- Spend plenty of time with them. First ask them open questions and then show the full experience end-to-end. This will often require incentivising them for their time.

- Be careful not to bias them: watch their behaviour without leading them in any way, among other techniques.

But I had a couple of constraints:

- I don’t want to spend any money

- I am one person with not much time and want to move as fast as possible

The general rule of thumb with user testing is that you only need to test with 5-7 people to start seeing trends. Moreover, as I hadn’t tested this with any users properly yet, I anticipated there would be a lot of “basic” feedback and things to work on for a second iteration. And on “target users”, for this chatbot to be successful I would need a lot of users, so my target audience is actually quite broad.

So given all of these considerations, my approach was simply to show it to people who I happened to have meetings with the week after the hackathon. I met with six people and showed them each the chatbot, watched their behaviour and listened to what they said.

Learnings from User Testing Iteration 1

Here are the key themes from the people I spoke with:

- People loved the audio and videos

- Surprisingly some people wanted the option to buy more than one net

- Everyone tapped “yes” to knowing what malaria is

I also spoke with a friend who used to work in door-to-door sales for charities. He shared the techniques he was taught and used, such as asking people to donate and, if they say “no”, giving them more facts and asking again. This is called an “overturn” and can be done two or three times. He also advised establishing some interest before asking people to donate. And when someone says “yes” to donating, tell them how amazing they are and make it as easy as possible for them to donate.
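The overturn technique maps naturally onto a small state machine. This is a minimal sketch under stated assumptions: the limit of two overturns, the action names and `afterDonationAnswer` are hypothetical and only illustrate the flow described above.

```javascript
const MAX_OVERTURNS = 2; // "two or three times"; 2 is an illustrative choice

// Decides what to do after the user answers a donation ask.
// state.overturns counts how many "no" answers were met with more facts.
function afterDonationAnswer(state, answer) {
  if (answer === "yes") {
    // Tell them how amazing they are, then make paying as easy as possible.
    return { state, action: "thank_and_checkout" };
  }
  if (state.overturns < MAX_OVERTURNS) {
    // An "overturn": share another fact, then ask again.
    return { state: { ...state, overturns: state.overturns + 1 }, action: "share_fact_and_ask_again" };
  }
  // Out of overturns: stop asking.
  return { state, action: "stop_asking" };
}

let result = afterDonationAnswer({ overturns: 0 }, "no");
console.log(result.action); // share_fact_and_ask_again
```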

In answer to my questions on value and usability, the user tests were quite positive. I had some positive signals to suggest that some people would buy nets. And there were very few usability issues (and those that existed were easy to mitigate).

Changes for Iteration 2

So in addition to the meetings I had that week, I worked on a second iteration. It was simple enough incorporating this feedback. Moreover, the version we built in the hackathon was quite basic and needed more work. My main focus was on two parts:

- Improve the script to introduce more facts about malaria in a flow that made sense. And remove the question on what malaria is. I researched facts from the WHO and CDC. I also built out the flow if people tapped “no” to buying a net over and over so they would keep seeing new facts and eventually go into an infinite loop of mozzies.

- Build out the donation part to incorporate my friend’s feedback above and allow people to buy more than one net. I even built a simple screen to let people enter their credit card details so I could further test out this part with people (to get a better signal on whether they would actually donate).

The first iteration was demo-ready, but not really product-ready. So the focus of the second iteration was really just to make the end-to-end experience work properly so it could be properly tested.

I was attending a conference the following weekend called EA Global in London. It was the annual gathering of about 600 “effective altruists” who are focused on figuring out how they can do the most good in the world. I assumed they may be the perfect target audience for my chatbot (although to achieve my goals it would need much broader use, but this audience could be a good starting point). So I rushed to get the second iteration complete by Friday!

Iteration 3 (24 hours)

User Testing Iteration 2 – An Engagement Issue

At the EA Global conference I managed to test the chatbot with 12 people. I shamelessly pulled out my phone within minutes of meeting someone new and asked them if they wouldn’t mind trying it out!

The feedback I received on this iteration was incredibly important and a big theme emerged. People liked the zapping game and being drip-fed information between zaps. But they lost interest after about the third zap and weren’t sufficiently engaged to reach the end where they had the opportunity to donate.

Admittedly, in this new iteration the first ask to donate came after seven zaps, compared to just three zaps in the first iteration. But that’s because the first iteration didn’t provide enough facts to educate someone about malaria to the point where they might be convinced to donate.

Solving the Engagement Issue

So there were two ways to solve this problem. Either reduce the time before you first ask someone to donate, or keep them engaged for longer. I preferred the second option as you need enough time to share enough information to convince someone to donate in the first place. So how do you keep someone engaged for longer?

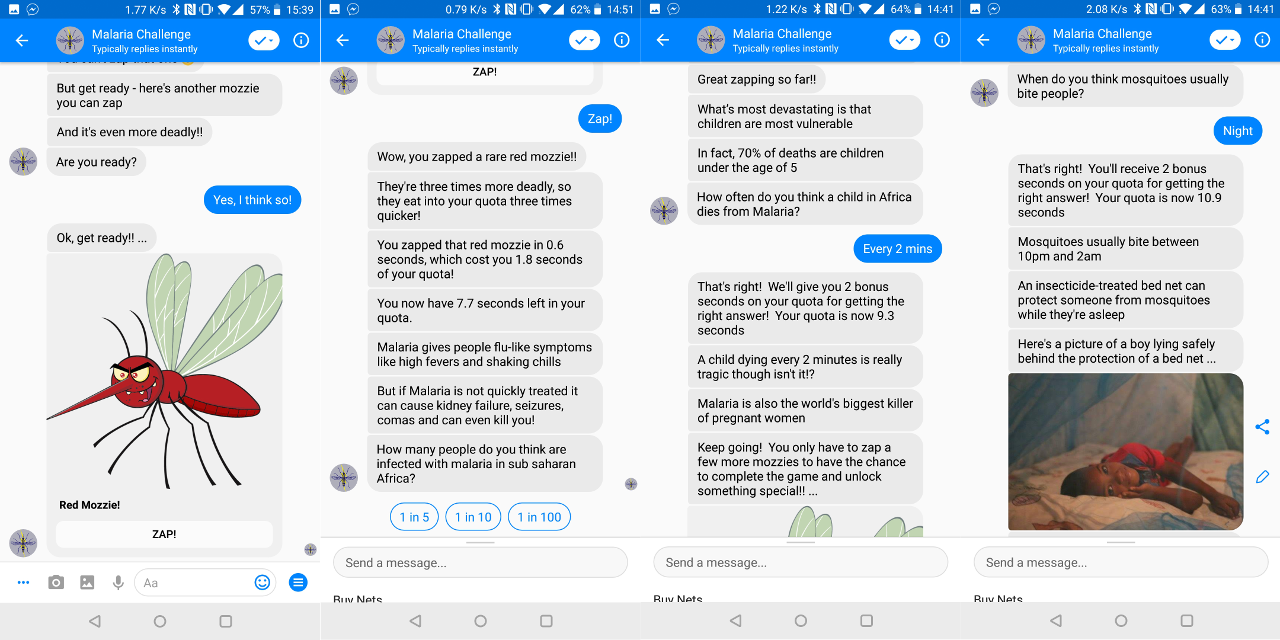

Fortunately, plenty of ideas came out in these user tests too. One idea was a different coloured mozzie, such as a red mozzie. This could be more deadly so that you have to zap it even quicker. This is similar in concept to the different coloured moles I introduced in Whack-a-Mole. Another idea was to introduce questions to engage people more during the experience.

And then the best idea emerged in my final user test with a guy called Sanjay just as I was leaving the conference. The idea of having a quota of 10 seconds and each zap eats into your quota. So instead of having to zap all mosquitoes in a certain time, each zap will eat into your quota and you have to reach the end of the game with some of your quota intact. It turns out that this wasn’t Sanjay’s idea, but it was what I thought he said (I think he meant something slightly different). Anyway, when I repeated this idea to him he liked it.

So these were the three main concepts I wove into the third iteration. A quota of 10 seconds, more deadly red mozzies and questions peppered in amongst the facts and mozzies.
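Here is a minimal sketch of the quota mechanic: each zap's reaction time is deducted from a 10-second quota, and the player wins by reaching the end with some quota left. How "more deadly" red mozzies work isn't specified, so this assumes they drain the quota at double rate; `applyZap`, `playRound` and `RED_MULTIPLIER` are hypothetical names.

```javascript
const START_QUOTA_MS = 10_000; // the 10-second quota
const RED_MULTIPLIER = 2;      // assumption: red mozzies drain the quota twice as fast

// Subtracts one zap's reaction time from the remaining quota.
function applyZap(quotaMs, reactionMs, isRed = false) {
  const costMs = reactionMs * (isRed ? RED_MULTIPLIER : 1);
  const remainingMs = Math.max(0, quotaMs - costMs);
  return { remainingMs, gameOver: remainingMs === 0 };
}

// Plays a sequence of zaps; the player wins by reaching the end with quota left.
function playRound(zaps) {
  let quotaMs = START_QUOTA_MS;
  for (const { reactionMs, isRed } of zaps) {
    const result = applyZap(quotaMs, reactionMs, isRed);
    quotaMs = result.remainingMs;
    if (result.gameOver) return { survived: false, remainingMs: 0 };
  }
  return { survived: true, remainingMs: quotaMs };
}

console.log(playRound([{ reactionMs: 1500 }, { reactionMs: 1000, isRed: true }]));
// { survived: true, remainingMs: 6500 }
```

Compared with a fixed time limit per mozzie, a shared quota lets one slow zap be offset by several fast ones, which keeps players in the game for longer.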

Addressing other Learnings from User Testing

The questions had another added benefit that addressed another common bit of feedback I heard. A few people felt the experience was too fast and they didn’t have time to read all the facts and information. But a few people felt it was too slow! How do you address that!? Well, I sped it up slightly to keep those who wanted it faster engaged. But by having questions between each zap, there was now a natural pause to let those who wanted it slower catch up. I also introduced a couple of pauses between zaps towards the start of the game, before the questions begin.

A few other really helpful bits of feedback came out too that were easier to address. The timings of the zaps needed tweaking (to be a bit harder) as well as the variety of language in response to zaps (to try and avoid a user seeing the same phrase twice). Some people found some of the language too extreme at times, so this needed softening. Most people found the statistic about children most compelling, so I brought this towards the start of the experience. I was reluctant to do this as I felt it might “guilt-trip” people, but that wasn’t the case.
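Avoiding repeated phrases can be as simple as tracking which responses a user has already seen. A minimal sketch, assuming a per-user `Set` of seen phrases; the phrases themselves and `pickPhrase` are hypothetical.

```javascript
// Hypothetical zap responses; a real bot would have many more.
const ZAP_PHRASES = ["Nice zap!", "Got it!", "Splat!"];

// Picks a phrase this user hasn't seen yet; once all have been used, starts over.
// `seen` is a per-user Set; `rng` is injectable so the choice can be made deterministic.
function pickPhrase(phrases, seen, rng = Math.random) {
  let candidates = phrases.filter((p) => !seen.has(p));
  if (candidates.length === 0) {
    seen.clear();
    candidates = phrases.slice();
  }
  const choice = candidates[Math.floor(rng() * candidates.length)];
  seen.add(choice);
  return choice;
}

const seen = new Set();
console.log([1, 2, 3].map(() => pickPhrase(ZAP_PHRASES, seen))); // three different phrases
```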

I also had to better deal with textual input a user might type when they first started playing with it. And finally, everyone tried tapping the picture of the mozzie to zap it the first time, but took time to find the actual “Zap” button. So I added some grace to the speed of their first zap and directed them to tap the button instead of the picture.

One Final Learning

One final, surprising learning came out. Effective Altruists are not a good target audience for this chatbot. Many people I spoke with at the conference plan their giving in advance, which means they wouldn’t have donated spontaneously through the chatbot. This was really useful to understand!

Iteration 4 (24 hours)

Goal & Approach for User Testing Iteration 3

I thought that iteration 3 was pretty solid and almost there. But it did introduce a few new concepts such as a quota, red mozzies and questions. So the goal of user testing this iteration was to see if these concepts kept people engaged until they were asked to donate. And also to tweak anything that didn’t work quite right.

I was fortunate that David Sorley, the designer who worked on my project at the hackathon, offered to do some user testing with people in Leeds where he worked. He recorded videos of the screen as people interacted with the chatbot (with audio for what the person was saying) and sent them to me so I could observe. He kindly did six user tests for me. I also tested it with 14 people who I had meetings with over the next couple of weeks. So in total we did 20 user tests at this stage of the project.

A Series of Small Iterations and Rapid Testing

As the feedback for this iteration was more about tweaks and smaller changes, I made these changes between user tests. So iteration 4 was really a sequence of small iterations, testing the new version with the next person. At one point, David sent me a video of a user test from Leeds with feedback that the typing indicator that pops up when the chatbot is writing messages makes the text jump up and down and difficult to read. I had heard this feedback from a few other people too, but not acted on it yet. So I went and made a change in 10 minutes that fixed this issue in time for David’s next user test 30 minutes later! His feedback about this change was then very positive 😊

Tweaks Made Based on the Learnings

One other key piece of feedback, which I continue to hear, is that the game still feels a bit too long before asking people to donate. I added a small change to the start of the chatbot to set people’s expectations that it will take 5-10 minutes of their time, which I think has helped somewhat. But I’m now at the point where I’d like to see real data of people using it to see if they actually drop off or not before getting to the donation ask. So I’ve decided not to tweak further until it is live and iterate after that.

I made some small tweaks based on other feedback. Some people wondered if the Against Malaria Foundation was legit. So I added some extra information about them. Some people ran out of quota. So I rewarded people with extra quota seconds for getting a question right. This has the added benefit of making the questions and the game more engaging. I dealt better with some textual inputs that people were typing. Someone said that it wasn’t clear that it was an automated chatbot and felt like it could be a person harassing them with rapid typing! So I addressed this with a short explanation up front. And I further tweaked the information and timings of the messages.

Malaria Challenge vs. Zap-a-Mozzie

We even tested the name of the chatbot with a few people. We were trying to decide whether to call it the “Malaria Challenge” or “Zap-a-Mozzie”.

David and I were leaning towards the “Malaria Challenge” because only the first part of the game was about zapping mozzies. Once a user purchased a net, the next part of the game was about climbing the leaderboard by inviting your friends to buy nets and protecting more people.

David ran this by a few users who were testing the chatbot and they also preferred the name “Malaria Challenge”. Decision made 😊

Payments and Post-Purchase Flow

But most of the work for this iteration was cleaning up my code from the hackathon and then building out the payment and post-purchase flows to complete the end-to-end experience.

I won’t go into the code cleanup, but this took a few hours. The payment flow was necessary so that people could actually pay. I then had to build the post-purchase flow to tackle the second goal for this project, which was to convince people to persuade their friends to buy nets. User testing this second goal though would be much harder without a live product as people would want to share with their own friends. They could only do this on their own Facebook Messenger account on their phone. So I’ll need to wait till it’s live to user test this. But I needed to build the basics to have it launch-ready.

Building the Payment Flow with Stripe

It took me about 12 hours to build out a payment flow with Stripe, my preferred platform for online payments. This was my first time implementing Stripe. I started by taking one of their pre-built, pixel-perfect checkout flows, modifying it in line with the Against Malaria Foundation’s branding and stripping out the fields I didn’t need. I then had to make sure it rendered well inside a webview in Messenger. Finally, I used Messenger Extensions to make it load more nicely and to close the webview automatically after a user makes a payment, taking them back into the chatbot. This UI work took about 4 hours in total.

Once the User Interface (UI) was complete, I worked on the logic of processing payments using Stripe’s API. It was actually pretty straightforward: some client-side JavaScript captures the necessary information from the checkout form and passes it to my server, where Node.js code processes the payment. That part took about 8 hours.

Building the Post-Purchase Flow & Leaderboard

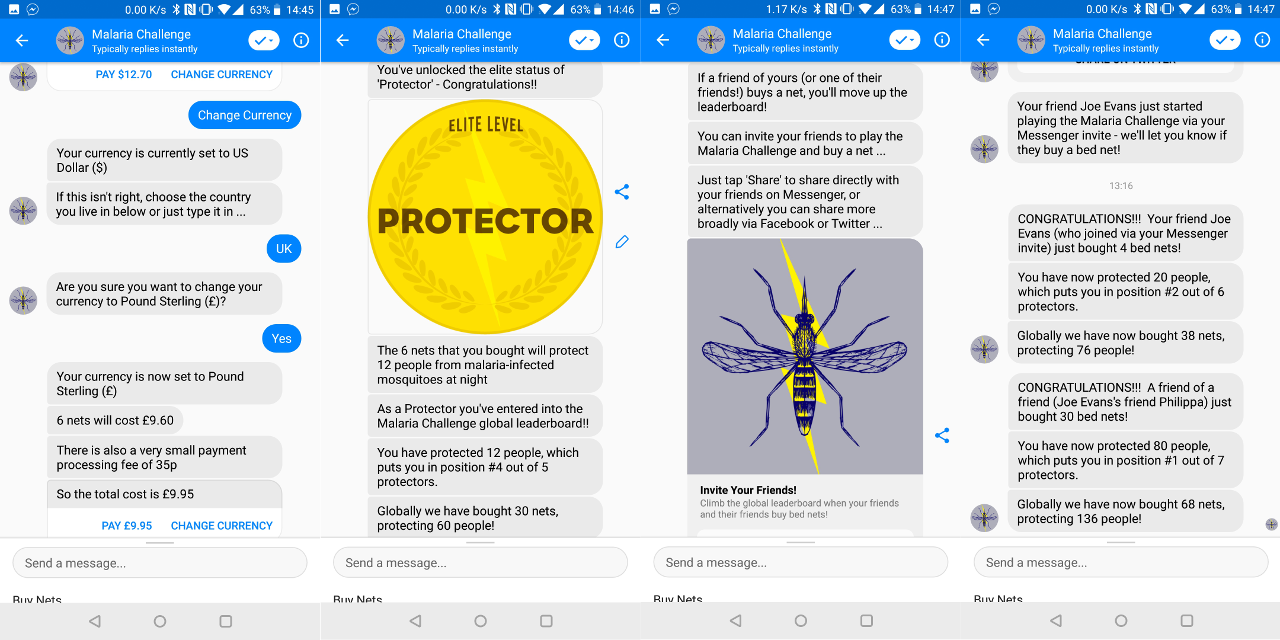

Finally, I worked on the post-purchase flow by building out the concept of a global leaderboard of “Protectors”. Once someone buys a net, they have protected 2 people and will be towards the bottom of the global leaderboard. If they buy 10 nets, they will have protected 20 people and will be a little higher up. But if they invite their friends to the chatbot and they buy nets, that will count towards their position on the leaderboard.

For example, if their friend buys 20 nets, which protects 40 people, they will both move up the leaderboard by 40 lives protected. The same goes for friends of friends (but stops there, otherwise you can never get ahead of the person who invited you!). Each time you protect more lives, you’ll also get feedback on the global effort and how many lives have been protected overall.
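The two-level rule can be expressed as a small scoring function. This is a sketch under assumptions: users are stored as a `Map` of id to `{ nets, invitedBy }`, and `livesProtected` and `leaderboard` are hypothetical names, not the repurposed Whack-a-Mole code.

```javascript
const PEOPLE_PER_NET = 2;

// users: Map of id -> { nets, invitedBy } (invitedBy is null for people who weren't invited).
function livesProtected(users, userId) {
  const invitees = (id) =>
    [...users.entries()].filter(([, u]) => u.invitedBy === id).map(([childId]) => childId);
  const friends = invitees(userId);
  const friendsOfFriends = friends.flatMap((id) => invitees(id)); // stops at two levels
  const counted = [userId, ...friends, ...friendsOfFriends];
  const nets = counted.reduce((sum, id) => sum + users.get(id).nets, 0);
  return nets * PEOPLE_PER_NET;
}

// Global leaderboard, highest first.
function leaderboard(users) {
  return [...users.keys()]
    .map((id) => ({ id, lives: livesProtected(users, id) }))
    .sort((a, b) => b.lives - a.lives);
}

// A invites B, B invites C, C invites D.
const users = new Map([
  ["A", { nets: 1, invitedBy: null }],
  ["B", { nets: 20, invitedBy: "A" }],
  ["C", { nets: 1, invitedBy: "B" }],
  ["D", { nets: 5, invitedBy: "C" }],
]);
console.log(leaderboard(users).map((e) => `${e.id}: ${e.lives}`)); // [ 'B: 52', 'A: 44', 'C: 12', 'D: 10' ]
```

Because D counts for B and C but not for A, B can overtake the person who invited them, which is exactly why the chain stops at friends of friends.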

I repurposed a bit of code from Whack-a-Mole that does something similar. When I tested it out with a few test users, I was pleasantly surprised by the results. The effect of seeing real-time when your friends start using the chatbot and buy nets is quite powerful. I’m excited to see this live and the effect it will have on people sharing with their friends. I expect I’ll need to further improve this flow, but I’ll iterate on this once it’s live and I can observe people’s actual behaviour.

Launch-Ready

The latter user tests yielded generally positive feedback and there wasn’t much consistency between them. This is a good sign. It means that it’s in pretty good shape and probably ready for live testing.

So in an ideal world, I would launch at this point and observe how people actually behave when using the product independently. I knew at this stage that the risk of this not working (i.e. not going viral and raising millions of pounds!) was quite high. So launching sooner rather than later would make sense, so I don’t waste more time on it if it doesn’t work. And it had only been 29 days since I started working on it (or 12 working days).

Dependencies with Facebook & the Against Malaria Foundation

So I submitted the chatbot to Facebook for review as they need to approve it before it can go live. They approved it in less than 24 hours 😊

I also sent an email to the Against Malaria Foundation to summarise the project, its progress and the actions I need them to take. For example, I need them to set up a Stripe account connected to their bank account. And I need the CEO to record three videos to replace my “fake” videos in the chatbot. It would be great if they could also link to the chatbot from their website to improve its legitimacy, and send a follow-up email to thank users after they donate. Given my lack of experience in the charity sector, there may be other things we need to do too that I haven’t yet considered!

As I wait to finalise these items with the Against Malaria Foundation, I had a choice of moving on to the next project or continuing to iterate based on the feedback I’ve heard. As my head is so deep in this project and I had so much momentum, I decided to carry on. So I built out another iteration to get the chatbot into even better shape when it launches …

Iteration 5 (86 hours)

The main work for this iteration was focused on the payments part. Specifically, supporting other currencies and introducing the necessary payment processing fee which is unavoidable.
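Card processors like Stripe typically charge a percentage plus a fixed fee per payment, so covering the fee means "grossing up" the price. The post doesn't say how the fee was calculated, so this is only a sketch of the standard formula; the rates in the example are illustrative assumptions, not current Stripe pricing.

```javascript
// Amount to charge (in minor units, e.g. pence) so that `targetMinor` remains
// after a fee of `percentFee` (as a fraction) plus `fixedFeeMinor`.
// Solves: charge - (charge * percentFee + fixedFeeMinor) >= targetMinor.
function grossUp(targetMinor, percentFee, fixedFeeMinor) {
  return Math.ceil((targetMinor + fixedFeeMinor) / (1 - percentFee));
}

// What actually arrives after the fee for a given charge.
function netAfterFee(chargeMinor, percentFee, fixedFeeMinor) {
  return chargeMinor - (chargeMinor * percentFee + fixedFeeMinor);
}

// Illustrative rates only (1.4% + 20p); check current pricing for the account's country.
console.log(grossUp(150, 0.014, 20)); // 173
```

Rounding up with `Math.ceil` ensures the charity never receives less than the target amount.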

Until then, I had hard-coded Pound Sterling (£) as the only currency in the chatbot. But I have friends in the US who want to try it out as soon as it’s ready, and another friend who wants to invite her friends in South Africa. Given that sharing is at the heart of the experience, and how globally connected my friends and I are, it is likely that many different currencies will need to be supported.

Now it would still work for users in the US and South Africa, but costs would all be shown in Pound Sterling, which might be a bit confusing. It would also involve a currency conversion at the time of payment, so the amount someone actually pays would be unclear. I assumed that supporting a few more currencies would only take a day or two. But then I thought it might not be much extra work to support all the currencies (!) … or at least the 135 currencies that Stripe supports.

The Hard Work of Rendering Currencies (and Countries)

My assumption wasn’t entirely accurate though. Stripe does take care of all the heavy lifting of the payment process in different currencies. But a large part of the complexity for me was rendering the currency correctly for the user. This turned out to be non-trivial!

The Complexity of Rendering Currencies

A net costs £1.60 for someone in the UK. It is $2 for someone in the US. The only difference in rendering these amounts is the symbol used. £ for the former and $ for the latter (and the actual amount of course).

But what about someone in France? There, the cost is 1,80 €. Notice that the symbol now appears after the amount rather than before, and that the decimal separator is a “,” rather than a “.”. The thousands separator isn’t always a “,” either: it is sometimes a “.” (usually the opposite of the decimal separator), and sometimes a “ ” (space).

But it gets even more complicated! While most currencies have two decimal places, some have none and others have three. Currencies also have their own symbols for the smaller unit, like “¢” and “p”. And even for the same currency, different countries have different conventions: for example, while France displays the € after the amount, Denmark displays it before the amount.

Different currencies sometimes share the same symbol too. For example, 23 different currencies use the $ symbol, including USD (used in the US and 16 other countries), AUD (used in Australia and 7 other countries) and CAD (Canada). So it’s important to make clear which currency the user is paying in, in case they are unsure.
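To make the rules above concrete, here is a minimal sketch of a formatter driven by per-country records holding the symbol, its position, the separators and the number of decimal places. The record layout and the names `FORMATS` and `formatAmount` are hypothetical, not the actual data set's schema, and amounts are assumed to be stored as integers in the currency's smallest unit to avoid floating-point rounding.

```javascript
// Hypothetical formatting records, one per country/currency pair, mirroring the
// fields described above. Real values would come from the ingested data set.
const FORMATS = {
  GB_GBP: { symbol: "£", symbolFirst: true, decimalSep: ".", thousandsSep: ",", decimals: 2 },
  FR_EUR: { symbol: "€", symbolFirst: false, decimalSep: ",", thousandsSep: " ", decimals: 2 },
  JP_JPY: { symbol: "¥", symbolFirst: true, decimalSep: ".", thousandsSep: ",", decimals: 0 },
};

// Formats a non-negative integer amount given in the currency's smallest unit
// (pence, cents, or whole yen for a currency with no decimal places).
function formatAmount(amountMinor, fmt) {
  const factor = 10 ** fmt.decimals;
  const whole = Math.floor(amountMinor / factor);
  const fraction = amountMinor % factor;
  // Insert the thousands separator every three digits, counting from the right.
  const wholeStr = String(whole).replace(/\B(?=(\d{3})+(?!\d))/g, fmt.thousandsSep);
  const number =
    fmt.decimals > 0
      ? wholeStr + fmt.decimalSep + String(fraction).padStart(fmt.decimals, "0")
      : wholeStr;
  // This sketch always puts a space before a trailing symbol and none after a leading one.
  return fmt.symbolFirst ? fmt.symbol + number : number + " " + fmt.symbol;
}

console.log(formatAmount(160, FORMATS.GB_GBP)); // £1.60
console.log(formatAmount(180, FORMATS.FR_EUR)); // 1,80 €
console.log(formatAmount(123456789, FORMATS.FR_EUR)); // 1 234 567,89 €
console.log(formatAmount(1500, FORMATS.JP_JPY)); // ¥1,500
```

JavaScript's built-in `Intl.NumberFormat` (with `style: "currency"`) also knows many locale conventions, though its output uses non-breaking space characters and follows the locale rather than a hand-curated per-country table.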

Some Extra Complexity to Render Country Names

Finally, when people switch currencies, the best way to help them do this is to ask them which country they live in. This meant that I needed to render country names correctly. It might be strange to show the option of “United States of America” to a US citizen. Instead it might be better to say “US”. This is both more concise and feels more natural in the conversational experience.

Moreover, if the country is mentioned in a sentence, such as “Do you live in US?”, it should be prefixed with “the”: “Do you live in the US?”. It turns out there are 35 country names that should be prefixed with “the” in a sentence, so I needed to find out what these are and ingest them into my database. I needed to do the same for the four countries whose names should be abbreviated: the US, the UK, the UAE and the DRC.
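Once those two facts are stored per country, rendering is straightforward. The sketch below assumes hypothetical `shortName` and `takesThe` fields and shows only a handful of countries; it separates the standalone label (for a quick-reply option) from the mid-sentence form.

```javascript
// Hypothetical country records; only a handful of the 35 "the" countries are shown.
const COUNTRIES = {
  US: { name: "United States of America", shortName: "US", takesThe: true },
  GB: { name: "United Kingdom", shortName: "UK", takesThe: true },
  NL: { name: "Netherlands", takesThe: true },
  FR: { name: "France", takesThe: false },
};

// Name to show on its own, e.g. as a quick-reply option.
function displayName(code) {
  const country = COUNTRIES[code];
  return country.shortName || country.name;
}

// Name to use mid-sentence, with "the" where the country needs it.
function nameInSentence(code) {
  return (COUNTRIES[code].takesThe ? "the " : "") + displayName(code);
}

console.log(`Do you live in ${nameInSentence("US")}?`); // Do you live in the US?
console.log(`Do you live in ${nameInSentence("FR")}?`); // Do you live in France?
```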

Building a Rendering Solution

And all of this logic needs to be implemented just to render the currencies and country names correctly! Most of this work is getting the information for all the countries. I couldn’t find any single resource that had all this information in one place, so I had to use about 7 or 8 different websites to get this information and build 7 or 8 different scripts to ingest this into my database!

The best resource that I found was geonames.org, which had a lot of the basic information for all the countries. I used a Node.js library called currency-symbol-map to get the currency symbols. Then I used a whole variety of websites to get the smaller-denomination symbols, decimal separators, thousands separators, number of decimal places, full currency name, whether the symbol appears before or after the amount and which country names should be abbreviated and prefixed with “the”!

This whole effort probably took about 30 hours! And I know that sharing is caring, so here’s the full data set in JSON format for those of you who are developers reading my blog. And to make it really easy for you, if you’re using MongoDB as your database (as I am), just import this directly into your database using this command:

```shell
mongoimport -h <database host> -d <database name> -c countries -u <mongo username> -p <mongo password> --file <countries.json file downloaded from link>
```

Other Work to Support All The Currencies

Ok, so we can now render all the currencies in the world. What else do we need to do to robustly support them all?

Stripe-Related Work

Well, Stripe doesn’t support all the currencies, but it supports most. 135 currencies in fact, spanning 231 countries (out of 252). But we have to incorporate that logic into the chatbot so we don’t allow someone to use a currency that isn’t supported.

Stripe’s fees also vary depending on the country of the payment card. When I contacted Stripe, they kindly let me join their beta for non-profit fees, which are slightly lower than their normal fees. Here is their non-profit fee structure:

- 1.2% + £0.20 for EU Visa and MasterCard transactions

- 2.9% + £0.20 for non-EU and American Express transactions

So to implement this fee structure, I needed to mark which countries Stripe treats as European for card purposes. This is listed here. I decided not to worry about Europeans using American Express (which incurs the higher fee). Instead, I suggest that Europeans use Visa or Mastercard. If I see a large number of Europeans using American Express, I may change this.
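One way to turn this fee structure into an amount to add on top of the net price is to "gross up" the total, because Stripe's percentage applies to the whole payment, fee included. The following is a minimal sketch under stated assumptions: `EU_CARD_COUNTRIES` is a hypothetical subset standing in for Stripe's list, and the £0.20 fixed part is assumed to be already converted into the payment currency's minor units.

```javascript
// Hypothetical subset of country codes whose cards Stripe treats as European;
// the real list comes from Stripe's documentation.
const EU_CARD_COUNTRIES = new Set(["GB", "FR", "DE", "DK", "IE"]);

// Non-profit beta rates quoted above, with the fixed part in minor units.
const RATES = {
  eu: { percent: 0.012, fixedMinor: 20 },
  other: { percent: 0.029, fixedMinor: 20 },
};

// Returns the fee (in minor units) to add to netMinor so that, after Stripe takes
// its percentage of the *total* plus the fixed amount, at least netMinor remains.
function processingFee(netMinor, cardCountry) {
  const rate = EU_CARD_COUNTRIES.has(cardCountry) ? RATES.eu : RATES.other;
  const totalMinor = Math.ceil((netMinor + rate.fixedMinor) / (1 - rate.percent));
  return totalMinor - netMinor;
}

console.log(processingFee(157, "GB")); // 23
console.log(processingFee(157, "US")); // 26
```

Rounding the total up (rather than to the nearest penny) can cost the donor an extra penny, but it guarantees the charity never receives less than the net price.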

Computing Bed Net Costs & Fees in Each Currency

Then I had to calculate the actual cost of a net and the fees in each currency, and build a script to update these regularly as exchange rates fluctuate. I used API Layer to get up-to-date exchange rates for each currency relative to the US dollar. But I didn’t want prices in a particular currency to change too often. So I set the script to run weekly and to update a given currency only if its rate is at least 5% higher or lower than the current value.
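The weekly "only update on a 5% move" rule can be sketched as a pure function over stored and freshly fetched rates. Fetching from API Layer is deliberately left out (no particular endpoint is assumed), and the rates in the example are made-up numbers expressed as units of each currency per 1 US dollar.

```javascript
// True when the latest rate differs from the stored one by at least `threshold` (5% by default).
function shouldUpdate(storedRate, latestRate, threshold = 0.05) {
  return Math.abs(latestRate - storedRate) / storedRate >= threshold;
}

// Given stored and latest rates (currency units per 1 USD), return only the
// currencies that are new or have moved by at least the threshold.
function ratesToUpdate(stored, latest, threshold = 0.05) {
  const updates = {};
  for (const [code, rate] of Object.entries(latest)) {
    if (!(code in stored) || shouldUpdate(stored[code], rate, threshold)) {
      updates[code] = rate;
    }
  }
  return updates;
}

// Made-up rates: GBP moved about 3.75% (kept), EUR about 5.6% (updated), ZAR is new.
console.log(ratesToUpdate({ GBP: 0.8, EUR: 0.9 }, { GBP: 0.83, EUR: 0.95, ZAR: 14 }));
// { EUR: 0.95, ZAR: 14 }
```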

I also didn’t want prices to be arbitrary values. For example, a base cost of $2 for a bed net converts to about £1.57 today. The fee would be £0.22, making a total payment of £1.79. These are not nice round numbers. So I built a rounding function that rounds up to the next number that doesn’t seem arbitrary. In this example, the net price would be £1.60, the fee £0.25 and the total £1.85. This conveniently adds a slight buffer in case some currencies drop and some payments end up slightly less than the cost of a net, or in case lots of Europeans use American Express. It turns out that this rounding function wasn’t too straightforward, and it took a bit of time to craft.
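Here is one possible "round up to a non-arbitrary value" rule, offered as a sketch rather than the function described above. The step sizes are a hypothetical choice: amounts under 100 minor units round up to a multiple of half their order of magnitude, and larger amounts to a multiple of a tenth of it, which scales to currencies with very large numbers.

```javascript
// Rounds an amount in minor units up to a "nice" value.
// Hypothetical steps: under 100, a multiple of half the order of magnitude
// (e.g. 5 for 10-99); from 100 upwards, a multiple of a tenth of it (e.g. 10 for 100-999).
function roundUpNicely(amountMinor) {
  const amount = Math.ceil(amountMinor); // work in whole minor units
  if (amount <= 0) return 0;
  const magnitude = 10 ** (String(amount).length - 1);
  const step = Math.max(1, amount < 100 ? magnitude / 2 : magnitude / 10);
  return Math.ceil(amount / step) * step;
}

console.log(roundUpNicely(157)); // 160
console.log(roundUpNicely(22)); // 25
console.log(roundUpNicely(27350)); // 28000
```

Rounding the net price and the fee separately (157 → 160 and 22 → 25) reproduces the £1.60 + £0.25 = £1.85 example above.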

Enabling a User to Change Currency

Then there is the process of a user actually changing currency. I added an extra button next to the payment button (which shows the price) so that users can change currency. Tapping it confirms their current currency and offers a choice of a few countries to switch to.

How did I select which countries go into this list and what the default currency of the user is when they start using the chatbot in the first place? Well, I use a variety of signals including their locale (which Facebook gives me), the locale of the person who invited them and the currency of the card their inviter used when they bought a net. I also considered using the timezone of the user and their inviter (which Facebook also gives me). But inferring currency from timezone was a little more complex. So I’ve put it on my future wishlist if it makes sense to do at any point. I then crafted an algorithm using all of these signals to figure out the default country and country list for currency changes.
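The actual algorithm isn't spelled out here, but a weighted vote over the signals is one simple way to combine them. In this sketch the weights, the fallback list and the names `rankCountries` and `countryFromLocale` are all hypothetical; it assumes Facebook locales of the form `en_GB` and drops any country whose currency Stripe doesn't support. The first entry would be the default, and the rest feed the currency-change options.

```javascript
// Hypothetical weights for each signal.
const WEIGHTS = { userLocale: 4, inviterCardCountry: 2, inviterLocale: 1 };

// Assumes locales like "en_GB", where the part after the underscore is a country code.
function countryFromLocale(locale) {
  const parts = typeof locale === "string" ? locale.split("_") : [];
  return parts.length === 2 ? parts[1].toUpperCase() : null;
}

// Returns supported country codes, most likely first, padded with fallbacks.
function rankCountries(signals, supportedCountries, fallback = ["US", "GB"], max = 4) {
  const scores = new Map();
  const add = (country, weight) => {
    if (country && supportedCountries.has(country)) {
      scores.set(country, (scores.get(country) || 0) + weight);
    }
  };
  add(countryFromLocale(signals.userLocale), WEIGHTS.userLocale);
  add(signals.inviterCardCountry, WEIGHTS.inviterCardCountry);
  add(countryFromLocale(signals.inviterLocale), WEIGHTS.inviterLocale);

  const ranked = [...scores.entries()].sort((a, b) => b[1] - a[1]).map(([code]) => code);
  for (const code of fallback) {
    if (!ranked.includes(code) && supportedCountries.has(code)) ranked.push(code);
  }
  return ranked.slice(0, max);
}

const supported = new Set(["US", "GB", "ZA", "FR"]);
console.log(rankCountries({ userLocale: "en_ZA", inviterLocale: "en_GB", inviterCardCountry: "GB" }, supported));
// [ 'ZA', 'GB', 'US' ]
```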

Understanding User Input

What if the country the user is in doesn’t exist in this short list of countries? At that point I ask them to type it in. How can I recognise the country from what they type in? For example, someone might type “us of a” when they mean the US.

This was actually surprisingly easy. Conveniently, Facebook bundle a Natural Language Processing (NLP) engine called Wit.ai into their platform. At the click of a button in my Facebook developer console, I now get a whole set of extra information with every message a user types, based on the output of Wit.ai’s engine. For example, if a user types “us of a”, Facebook tells me that it believes it is a “location” with 86.2% confidence, which has a granularity of “country” and resolves to the value “United States of America”. Pretty useful, right!? I had to take care of a few edge cases, but it works really well!
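A sketch of picking a country out of that NLP data might look like the following. The payload shape here is a simplified assumption modelled on the example above (the field names, including `granularity`, are hypothetical and should be checked against Facebook's Messenger documentation), and the 0.8 confidence threshold is an arbitrary choice.

```javascript
// Assumed, simplified shape of the NLP data attached to an incoming message.
const exampleNlp = {
  entities: {
    location: [
      { value: "United States of America", confidence: 0.862, granularity: "country" },
    ],
  },
};

// Returns the most confident country-level location, or null if none clears the threshold.
function countryFromNlp(nlp, minConfidence = 0.8) {
  const locations = (nlp && nlp.entities && nlp.entities.location) || [];
  const candidates = locations
    .filter((e) => e.granularity === "country" && e.confidence >= minConfidence)
    .sort((a, b) => b.confidence - a.confidence);
  return candidates.length > 0 ? candidates[0].value : null;
}

console.log(countryFromNlp(exampleNlp)); // United States of America
```

The resolved name would then still need matching against the country records in the database before offering the currency switch.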

I also enabled people to type in a currency code (like “usd” or “gbp”). It will now understand this input and ask if the user wishes to switch to this currency. I had to build a few exceptions like “all” (Albania) and “try” (Turkey) as these are common words a user might type in, where it is likely they don’t mean the currency.
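The currency-code check, including the exceptions for everyday words, could be sketched like this. `SUPPORTED_CODES` is a hypothetical subset of the Stripe-supported ISO 4217 codes that would really come from the database.

```javascript
// Hypothetical subset of the Stripe-supported ISO 4217 codes held in the database.
const SUPPORTED_CODES = new Set(["USD", "GBP", "EUR", "ZAR", "ALL", "TRY"]);

// Codes that double as everyday words, so the user probably doesn't mean the currency.
const COMMON_WORDS = new Set(["all", "try"]);

// Returns an upper-case currency code if the message is exactly a supported code, else null.
function currencyFromText(text) {
  const word = String(text).trim().toLowerCase();
  if (!/^[a-z]{3}$/.test(word) || COMMON_WORDS.has(word)) return null;
  const code = word.toUpperCase();
  return SUPPORTED_CODES.has(code) ? code : null;
}

console.log(currencyFromText("usd")); // USD
console.log(currencyFromText("try")); // null
```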

Securely Passing in and Rendering Costs in the Checkout Web Page

And finally, I had to pass the costs (the actual amounts with the currency code, and the rendered amounts) into the Stripe webview where someone enters their payment details. A simple way to do this would be to pass all the values in the URL. But that is more open to tampering. It would also let someone spoof the page so that victims end up paying more than they think they are paying. So the best and safest approach was to build an API (Application Programming Interface) so that the web page could ask my server what the costs are for a given number of nets in a given currency.
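The core of such an endpoint is that the server, not the URL, decides the amount. Below is a minimal sketch of the server-side lookup with validation of the untrusted query parameters; the price table, the `MAX_NETS` cap and the function name `quote` are hypothetical, and fees are left out to keep the focus on validation.

```javascript
// Hypothetical server-side price table: price per net in minor units, refreshed by the weekly job.
const NET_PRICES = new Map([
  ["GBP", 160],
  ["USD", 200],
]);
const MAX_NETS = 1000; // hypothetical cap to reject absurd requests

// Validates untrusted parameters from the checkout page and returns the amount
// to display and charge, or null if the request is invalid.
function quote(netsParam, currencyParam) {
  const nets = Number(netsParam);
  const currency = String(currencyParam || "").toUpperCase();
  if (!Number.isInteger(nets) || nets < 1 || nets > MAX_NETS) return null;
  if (!NET_PRICES.has(currency)) return null;
  return { currency, nets, amountMinor: nets * NET_PRICES.get(currency) };
}

console.log(quote("3", "gbp")); // { currency: 'GBP', nets: 3, amountMinor: 480 }
console.log(quote("1.5", "GBP")); // null
```

The same server-side calculation would also be used when creating the actual charge, so a tampered page cannot change what gets paid.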

An API lets machines talk to each other. In this case, my web page needed to talk to my chatbot server (the two are hosted in different places) to get the costs to display to the user. But it turns out that modern browsers don’t allow this kind of cross-origin request by default, as it’s a security risk. This exposed me to a new technology called Cross-Origin Resource Sharing (CORS), which lets a server explicitly permit it. Conveniently, Node has a library to make this a bit easier. But it still took a bit of time and fiddling to get it working.
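Without relying on any particular library, the essence of CORS for this case is a response header naming the origin that may read the response. This sketch uses a hypothetical checkout origin and a plain Node-style `(req, res)` handler; it only covers a simple GET without custom headers, which browsers send without a preflight `OPTIONS` request.

```javascript
// Hypothetical origin of the checkout web page, hosted separately from the chatbot server.
const ALLOWED_ORIGINS = new Set(["https://checkout.example.com"]);

// Headers that let the browser hand the response to scripts on an allowed origin.
function corsHeaders(requestOrigin) {
  if (!ALLOWED_ORIGINS.has(requestOrigin)) return {};
  return { "Access-Control-Allow-Origin": requestOrigin, Vary: "Origin" };
}

// A Node-style request handler; a real one would return the cost quote here.
function handleRequest(req, res) {
  const headers = { "Content-Type": "application/json", ...corsHeaders(req.headers.origin) };
  res.writeHead(200, headers);
  res.end(JSON.stringify({ ok: true }));
}

// To serve it: require("http").createServer(handleRequest).listen(3000);
```

Echoing back only a whitelisted origin, rather than `*`, keeps other sites from reading the endpoint's responses in a browser.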

A Summary of the Currency & Fee Work

Ok, so now I can make a good guess as to which country a user is in and what their currency should be. I can parse countries they enter to help them change currencies. There is an updated list of net prices and fees. These are nicely rounded so they don’t appear to be arbitrary values, and they only update if they rise or fall by at least 5% from the current price. People can only switch to Stripe-supported currencies. I can render prices in all 135 currencies, spanning 231 countries, with symbols for both the main and smaller denominations. And the price is displayed nicely and safely inside the webview where the user enters their card details.

Phew! So that effort took 8 whole days (48 hours) rather than the anticipated 1-2 days! It then needed further debugging over the following week or so, given the complexity of the code. Was it a waste of time? Well, now I’m confident that it will work for all users on day one if it gets shared beyond the borders of the UK. I’m also confident that the prices and fees will be calculated accurately. Moreover, I can repurpose all of this code for future projects that need multi-currency support. Plus it was a fun challenge! So I’m comfortable with how I used my time, but I think it’s always important to ask this question.

Other Work on This Iteration

Improving Responses to Messages Users Type

Following further user tests, I dramatically improved the responses my chatbot sent to messages that users typed in at various points in the experience. For example, it used to be that if you typed in a message at the start of the game, it would send duplicate messages. This was very confusing, so I fixed it. I also enabled people to type “mozzie” or “zap”, which would send them another mozzie. If someone types “buy”, it will start the purchase flow (unless they haven’t zapped a certain number of mozzies, which is needed to unlock this part of the game).

In general, my approach will be to see what people actually type in when the chatbot is live and build logic to better take care of these inputs. This is the approach I took with Whack-a-Mole. But I wanted to build some basics up front. And I had the benefit of being able to port over a lot of the relevant logic I had already built for Whack-a-Mole, which is already well optimised.

I reviewed countless messages that people typed into the Whack-a-Mole chatbot over a period of a few months and crafted customised rules and responses to improve the conversational part of the chatbot. When I last measured, about 95% of responses to what people type in Whack-a-Mole made sense and could have come from a human. I even noticed someone talking to the chatbot for over an hour! So using some of that logic will give me a head start with this chatbot.

A Menu, Error Checking and a New Website

I implemented a menu to let people buy more nets, invite their friends and give feedback. I also built a lot more error checking in case something went wrong, or in case someone tampered with the payment page to try to buy, for example, 1 million nets for £1.

As I now reference my company, Projected Games Ltd, at the start of the chatbot, I figured people might look for the website. The website was quite outdated (I built it in 2005!), so I archived it here. I then spent a couple of days creating a new website to showcase both the Malaria Challenge and Whack-a-Mole chatbots. I also said a little about the company and its history, gave people a way to get in touch and crafted a privacy policy for the chatbots. The new website is here.

Improved Sharing

I provided more options for how users can invite their friends to try out the Malaria Challenge. This came from one of my recent user tests. My friend Jess said that she wouldn’t share this in a 1:1 message with a friend, but might post it on Facebook and Twitter.

So in addition to the existing option of sharing directly with friends in Messenger, I added options to share on Facebook and Twitter too. Given how much the success of this chatbot depends on users sharing it with their friends, I hope this will turn out to be a high-impact feature.

A Bit of Social Pressure

Some of the cool ideas that came out of user testing were around applying some social pressure. For example, letting the user know that the friend who invited them has bought a net, as have 10 of their other friends.

This wasn’t too much effort to implement and may make for a more engaging and persuasive experience. So I peppered a few of these facts into the experience if people keep tapping “No” to buying a net. Also, just before asking people how many nets they would like to buy, I mention how many nets their friend who invited them had bought, but only if they bought at least six!
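The "only if they bought at least six" rule is small enough to show in full. In this sketch the function name and the message wording are hypothetical; only the threshold comes from the text.

```javascript
// Threshold from the text: only mention the inviter's purchase if they bought at least six nets.
const MIN_NETS_TO_MENTION = 6;

// Returns a line to send before asking how many nets to buy, or null to say nothing.
// The wording is hypothetical.
function inviterNetsLine(inviterName, netsBought) {
  if (!Number.isInteger(netsBought) || netsBought < MIN_NETS_TO_MENTION) return null;
  return `${inviterName} bought ${netsBought} nets.`;
}

console.log(inviterNetsLine("Sam", 8)); // Sam bought 8 nets.
console.log(inviterNetsLine("Sam", 2)); // null
```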

User Testing with an American Audience

A couple of friends of mine are user testing this with American users in California (as I have only user tested this with British users so far). They are the founders of Sparrow, an app that helps people give money to effective charities in an effortless way. I met them through the Effective Altruism community and they have an amazing background working directly for Dan Ariely, a leading behavioural psychologist at Duke University. Dan’s book, Predictably Irrational, is actually one of my top 20 books of all time. So I’m very thankful that two people with such a strong background in user testing and behavioural psychology are user testing my chatbot for me 😊

A Note on Performance and Scaling

And let me share a brief note on performance and scaling.

Fortunately I had some good but painful experience scaling Whack-a-Mole. At one point early in that project, I was growing by about 400 people every day. But I hadn’t architected the chatbot to cope with high volumes of users and activity. So the game slowed right down, taking up to 10-20 seconds to respond to people. It even started crashing at times with memory issues.

I spent a couple of months fixing this. It involved indexing my database, refactoring how I did some of my database queries, purging data that is no longer needed from my database (and backing it up to Dropbox so I have a persistent copy if needed) and upgrading my database storage and server performance. Fortunately, this experience has prepared me well to architect this new chatbot in a way that should scale much better.

So I’ve architected how I store data differently. I will be in a position to easily upgrade my storage and server performance when needed. I’ve already indexed my database according to the queries I’m running. My queries are well optimised. And while I haven’t built purging logic yet, this will be straightforward to do if storage space becomes an issue.

My experience with scaling Whack-a-Mole taught me that the bottleneck is usually inefficient database queries.

Costs to Support a Chatbot at Scale

But you might also be thinking that the server and database costs would be expensive with high volumes of traffic. Actually, Whack-a-Mole has only cost me $7 / month in server costs (with Heroku) and $18 / month in database costs (with mLab). So at just $25 (£18) / month, I have been able to support an interaction (i.e. a “Whack”) every 7 seconds 24/7 for the past year and a half. This has added up to over 5 million whacks in total!
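A quick back-of-the-envelope check of these figures, assuming roughly 18 months of hosting at $25 / month and treating “over 5 million” as exactly 5 million (which makes the per-whack cost an upper bound):

$$
\begin{aligned}
\text{hosting cost} &\approx \$25/\text{month} \times 18\ \text{months} = \$450 \\
\text{cost per whack} &\le \frac{\$450}{5{,}000{,}000} = \$0.00009 \\
\text{whacks at one per 7 s} &\approx \frac{1.5 \times 365 \times 86{,}400\ \text{s}}{7\ \text{s}} \approx 6.8\ \text{million}
\end{aligned}
$$

The last line is consistent with the “over 5 million” total.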

So I don’t expect this Malaria Challenge chatbot to cost much more. If it works and scales, raising millions of pounds, I wouldn’t expect to pay more than £50 / month in server and database costs. These will be my only costs beyond my actual time. And once it’s working well, it won’t take any more of my time either, but will continue to work and harvest donations! That’s the beauty of scalable tech products!

So Where Am I At Now and What’s Next?

After about 200 hours of work, I have a pretty decent chatbot that is ready for some live testing. It may or may not work, but I’m confident that it will raise at least a few thousand pounds and it may raise millions. And I know I will learn a lot from a live test about chatbots, donor behaviour and many other things that will help with future projects.

Here’s a video of the end-to-end experience of the latest version of the chatbot:

Final Actions with the Against Malaria Foundation

As discussed above, I need to complete a few final actions with the Against Malaria Foundation before going live, such as connecting their Stripe account to their bank account and getting the real CEO to record three videos to replace mine in the chatbot.

Hopefully we’ll complete these early in the new year and we can then launch!

Next Steps After Going Live

Once the chatbot is live, I’ll be eager to observe two aspects:

- Do people stay engaged for seven zaps until they get asked to donate and do they then donate?

- After they donate, do they share with their friends?

In the future, here are a few ways I could make the chatbot more engaging and more impactful in fighting malaria:

- Make sharing with friends more engaging. For example, enable people to record videos of themselves telling their friends why they should buy a bed net. Then show these videos to their friends within the chatbot experience (a friend came up with this idea during a user test).

- Connect donations to distributions, so that people can see the specific village or even people who they are buying nets for.

- Up-sell people to be regular givers for the Against Malaria Foundation, which will have a much bigger impact long-term. This could be done by making a more engaging, long-term chatbot experience.

But my next steps will be primarily driven by observing user behaviour and listening to feedback.

Coming Soon!

So hopefully I’ll be able to share the live chatbot with you soon. If you haven’t already, subscribe to my blog below and you’ll be the first to know when it’s live! … which might also give you an advantage to get higher up the Malaria Challenge leaderboard! 😉

In the meantime, I’ll probably start a new project in the new year.
